CS261 - HW # 0 : Describe a parallel application

by: José Abell

Biography

I have been a PhD student in the UC Davis CompGeoMech group since September 2011, working on our in-house high-performance FEM simulation system. I come from Chile, where I did my undergraduate studies in structural engineering, leading to a professional degree in the specialty, at Pontificia Universidad Católica de Chile. I then completed an MS in Civil Engineering at the same institution. My MS thesis focused on the hazard side of earthquake engineering.

My current research focuses on high-performance Earthquake-Soil-Structure Interaction (ESSI) simulation. In a nutshell, it consists of modeling the effect of earthquakes on structures, from the rupture process at the earthquake source all the way up to a structure and its contents. The idea is to find out how and when a more accurate ESSI simulation yields results that differ from what is currently done in practice, and whether this leads to safer and more economical designs.

Parallel, high-performance computing is an enabling technology in this endeavor and there are many opportunities along the process where leveraging parallelism is possible. Meshing, simulation, and post-processing are important examples of these.

Out of CS261 I would like to gain insight into what it takes to get extreme performance out of simulation software on different architectures. In particular, I would like to know more about the following topics:

- MPI usage patterns, how they come up, when to apply them, and tradeoffs.

- Shared memory parallel programming models (pthreads, OpenMP) and recurring software patterns.

- Parallel I/O techniques.

Application: ESSI Simulator

Introduction

The Real ESSI Simulator [1, 2] is a system for the simulation of ESSI problems developed at UC Davis. It consists of software, hardware (a parallel computer, the ESSI simulator machine), and documentation covering theory, usage, and examples for the system.

The ESSI program is a parallel, object-oriented finite element analysis (FEA) code for nonlinear time-domain analysis of ESSI systems. It is written in C++ and relies on several external libraries, most notably Open MPI, an implementation of the Message Passing Interface (MPI), which is used to achieve parallelism. Other libraries used within ESSI include PETSc for the parallel solution of systems of equations, METIS and ParMETIS for graph partitioning, and HDF5 (in its parallel version, phdf5) for parallel output. Input is controlled by a custom domain-specific language designed specifically for this program.

The software is meant to target a range of platforms from personal computers (desktop, laptop) to high-performance clusters and supercomputers.

Parallelism in FEA

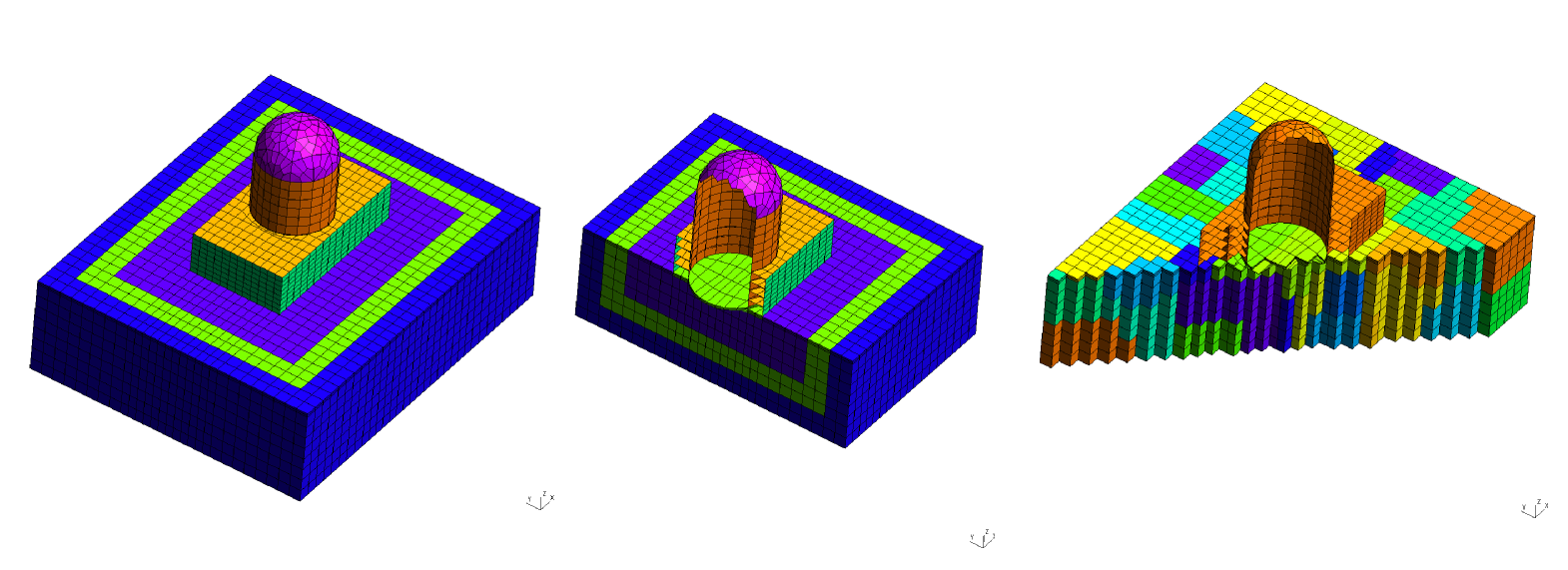

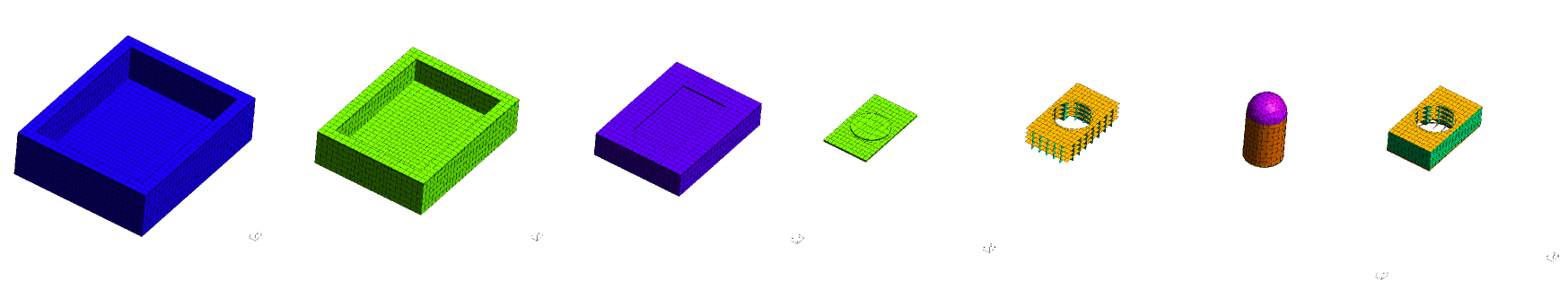

(Left and middle) Nuclear power plant model showing different areas, (right) domain decomposition of model.

Two main sources of parallelism can be identified in the context of nonlinear, dynamic finite element simulation: (i) the solution of the system of equations and (ii) element-level constitutive integration. The first consists of solving a large linear system of equations (SOE), which arises from discretizing the continuum problem (expressed as a set of coupled partial differential equations) in the spatial domain. The second comes from advancing the constitutive rate equations within each element once a global displacement increment has been obtained from the solution of the SOE. This last part can account for a large share of the computational time in large problems and is embarrassingly parallel, because each element is updated independently of the others.
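To make the "embarrassingly parallel" claim concrete, here is a minimal sketch of an element-level update loop. Everything in it is an assumption made for illustration: `ElementState`, `updateElement`, and `updateAllElements` are hypothetical names, the material law is a toy 1D elastic-perfectly-plastic model, and the OpenMP pragma stands in for whatever mechanism distributes the elements (ESSI itself distributes them across MPI processes). Compiled without OpenMP support the pragma is ignored and the loop runs serially with the same result.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical per-element state: a scalar stress, a yield limit, and a flag.
struct ElementState {
    double stress = 0.0;
    double yieldStress = 1.0;
    bool plastic = false;
};

// Toy 1D elastic-perfectly-plastic update, standing in for a real
// constitutive integrator. It reads and writes only its own element.
void updateElement(ElementState& e, double modulus, double strainIncrement) {
    const double trial = e.stress + modulus * strainIncrement;
    e.plastic = std::fabs(trial) > e.yieldStress;
    if (e.plastic) {
        // Return the trial stress to the yield surface.
        e.stress = (trial > 0.0) ? e.yieldStress : -e.yieldStress;
    } else {
        e.stress = trial;
    }
}

// Update every element for one global increment. Iterations share no data,
// so they may run in any order or concurrently.
void updateAllElements(std::vector<ElementState>& elems,
                       const std::vector<double>& strainIncrements,
                       double modulus) {
    assert(elems.size() == strainIncrements.size());
    const long n = static_cast<long>(elems.size());
    // Parallel when built with OpenMP (e.g. -fopenmp); serial otherwise.
    #pragma omp parallel for
    for (long i = 0; i < n; ++i) {
        updateElement(elems[i], modulus, strainIncrements[i]);
    }
}
```

Calling `updateAllElements(elems, strainIncrements, 1.0)` on five default elements with increments `{0.1, 0.5, 2.0, -3.0, 0.0}` leaves the third and fourth elements on the yield surface and flagged as plastic, while the others remain elastic.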

An additional source of parallelism in ESSI simulations is the storage of the large amounts of output these simulations generate. The philosophy adopted by the ESSI simulator is to store, independently, all the information necessary to rebuild the model and restart the simulation at any given point. This can produce terabytes of data even for modest models, which raises the additional problem of how to handle it. In ESSI this is done by using a network filesystem (NFS) to create a single virtual parallel disk and the HDF5 format to store the data. In a nutshell, HDF5 implements a format for storing scientific (array-oriented) data in a portable way, and it also allows parallel reads and writes (it uses MPI I/O under the hood).
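A common pattern in parallel array output is for each process to write its own contiguous slab of a shared dataset, with the slab offsets obtained from an exclusive prefix sum of the per-process item counts. The sketch below shows only that bookkeeping, with hypothetical names (`Slab`, `computeSlabs`), and is not taken from the ESSI source. In an MPI program the same offsets could be obtained with `MPI_Exscan`, and each process would then select its slab in the file (for example with HDF5's `H5Sselect_hyperslab`) before writing.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical description of the slab one process writes into a shared
// one-dimensional dataset: [offset, offset + count).
struct Slab {
    std::size_t offset;
    std::size_t count;
};

// Exclusive prefix sum over the per-process counts: process r starts where
// processes 0..r-1 end, so slabs never overlap and leave no gaps.
std::vector<Slab> computeSlabs(const std::vector<std::size_t>& countsPerProcess) {
    std::vector<Slab> slabs;
    slabs.reserve(countsPerProcess.size());
    std::size_t offset = 0;
    for (std::size_t count : countsPerProcess) {
        slabs.push_back({offset, count});
        offset += count;
    }
    assert(slabs.size() == countsPerProcess.size());
    return slabs;
}
```

For counts `{4, 0, 3}` the slabs start at offsets 0, 4, and 4, and the dataset needs 7 entries in total. A process with nothing to write simply gets an empty slab.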

A particularity of nonlinear (plasticity-based) FEA simulation is that parts of the domain, not known in advance, may plastify during the simulation, which increases the time spent integrating constitutive equations in those parts. This implies that an initial partition that balances the computational load may become unbalanced as the domain plastifies. ESSI addresses this with an adaptation of the dynamic domain decomposition method termed "plastic domain decomposition" (PDD) [3], which re-balances the computational load by repartitioning the element graph using computational time as one of the weighting factors.
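The re-balancing idea can be sketched with two small helpers. These are illustrative assumptions, not the PDD implementation described in [3]: `loadImbalance` measures how far a partition is from balance using measured per-element times, and `timeToWeights` turns those times into integer vertex weights of the kind graph partitioners such as METIS accept. The function names, the imbalance metric, and the `resolution` parameter are all hypothetical.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Ratio of the busiest partition's total time to the mean partition time.
// 1.0 means perfect balance; larger values mean more idle waiting.
double loadImbalance(const std::vector<double>& elementTime,
                     const std::vector<int>& elementOwner,
                     int numPartitions) {
    assert(numPartitions > 0);
    assert(elementTime.size() == elementOwner.size());
    std::vector<double> load(static_cast<std::size_t>(numPartitions), 0.0);
    double total = 0.0;
    for (std::size_t i = 0; i < elementTime.size(); ++i) {
        assert(elementOwner[i] >= 0 && elementOwner[i] < numPartitions);
        load[static_cast<std::size_t>(elementOwner[i])] += elementTime[i];
        total += elementTime[i];
    }
    if (total == 0.0) return 1.0;  // nothing measured yet: treat as balanced
    const double maxLoad = *std::max_element(load.begin(), load.end());
    return maxLoad / (total / numPartitions);
}

// Scale measured times to integer weights in [1, resolution], so that
// slow (plastified) elements count for more when the graph is repartitioned.
std::vector<long> timeToWeights(const std::vector<double>& elementTime,
                                long resolution) {
    assert(resolution >= 1);
    std::vector<long> weights(elementTime.size(), 1);
    if (elementTime.empty()) return weights;
    const double maxTime = *std::max_element(elementTime.begin(), elementTime.end());
    if (maxTime <= 0.0) return weights;
    for (std::size_t i = 0; i < elementTime.size(); ++i) {
        weights[i] = std::max(1L, std::lround(resolution * elementTime[i] / maxTime));
    }
    return weights;
}
```

With four elements split two and two, equal times give an imbalance of 1.0. If the two elements of the second partition plastify and become four times slower, the imbalance rises to 1.6, and a driver could use a threshold on that value to decide when repartitioning is worth its cost.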

Brief design description

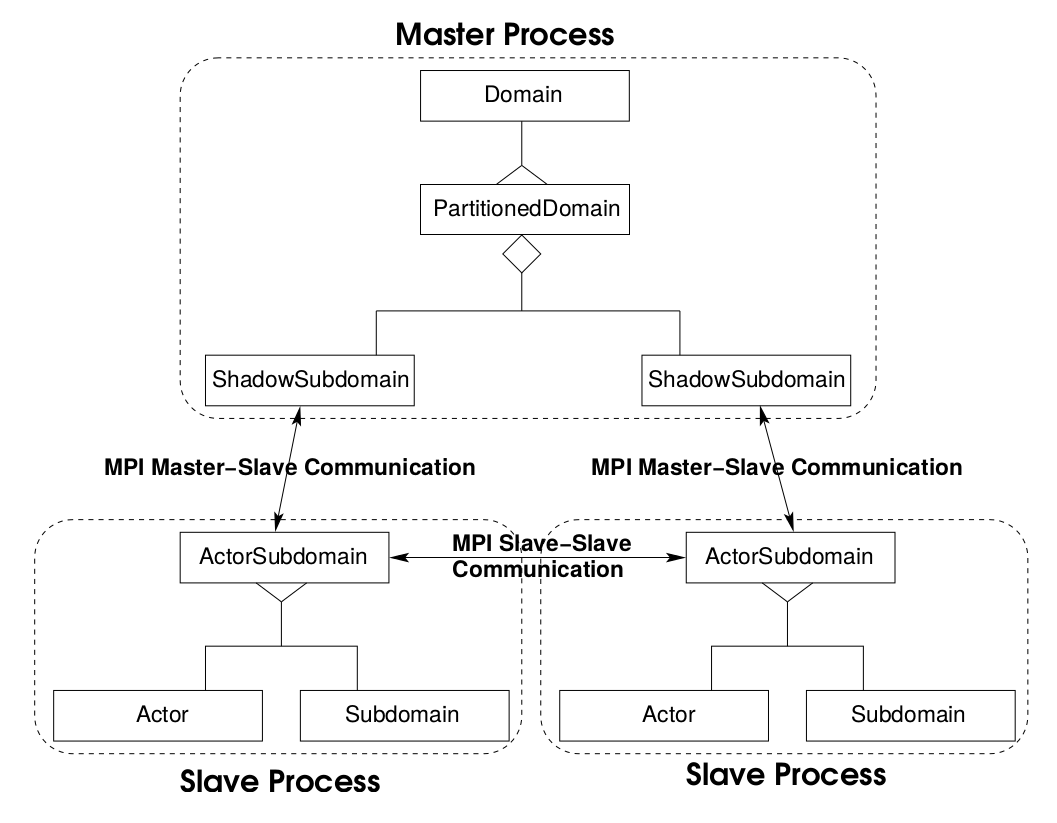

PDD is implemented in ESSI using the Actor/Shadow model of concurrency. Actors are autonomous and concurrently executing objects which execute asynchronously. Actors can create new actors and can send messages to other actors. The Actor model is an Object-Oriented version of message passing in which the Actors represent processes and the methods sent between Actors represent communications (verbatim from [4]).

Shadow actors are the means by which actors communicate. A shadow actor is an object that represents a remote running actor in a particular machine's local address space. Shadow actors are in charge of transmitting messages to and receiving messages from other actors, and they effectively encapsulate all MPI calls. In the object-oriented design of ESSI, this model allows code reuse and modularity when programming with MPI.
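The division of labor between an actor and its shadow can be illustrated with a small sketch. It is a simplified illustration under stated assumptions, not ESSI's class hierarchy: `Channel`, `InMemoryChannel`, `SumActor`, and `SumShadow` are hypothetical names, and the in-memory queue stands in for the network so that the example runs without MPI. In a real code the `Channel` implementation is where the MPI send and receive calls would live.

```cpp
#include <cassert>
#include <deque>
#include <numeric>
#include <vector>

using Message = std::vector<double>;

// Transport abstraction. A real implementation would wrap MPI calls here,
// keeping them out of the rest of the code.
class Channel {
public:
    virtual ~Channel() = default;
    virtual void send(const Message& m) = 0;
    virtual Message receive() = 0;
};

// In-process stand-in for the network: a simple FIFO queue.
class InMemoryChannel : public Channel {
public:
    void send(const Message& m) override { queue_.push_back(m); }
    Message receive() override {
        assert(!queue_.empty());
        Message m = queue_.front();
        queue_.pop_front();
        return m;
    }
private:
    std::deque<Message> queue_;
};

// The actor: runs on the remote side, takes a request and posts a reply.
class SumActor {
public:
    SumActor(Channel& requests, Channel& replies)
        : requests_(requests), replies_(replies) {}
    void processOne() {
        const Message m = requests_.receive();
        const double sum = std::accumulate(m.begin(), m.end(), 0.0);
        replies_.send({sum});
    }
private:
    Channel& requests_;
    Channel& replies_;
};

// The shadow: a local object with an ordinary interface that hides the
// message traffic to and from the remote actor.
class SumShadow {
public:
    SumShadow(Channel& requests, Channel& replies)
        : requests_(requests), replies_(replies) {}
    void requestSum(const Message& values) { requests_.send(values); }
    double collectResult() { return replies_.receive().at(0); }
private:
    Channel& requests_;
    Channel& replies_;
};
```

Calling code talks only to the shadow: `shadow.requestSum({1.0, 2.0, 3.5})`, then, once the actor has run `processOne()`, `shadow.collectResult()` returns 6.5. Swapping the transport means replacing only the `Channel` implementation, which is the reuse and modularity benefit mentioned above.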

Performance

As seen in [3], the plastic domain decomposition method provides a viable way to re-balance a plastifying domain and has shown reasonable scaling for low numbers of processes. A comprehensive scaling study on different platforms has not yet been performed.

Currently, ESSI's parallelization is done exclusively with MPI. This implies that on the lower end of the platforms we intend to cover (PCs, laptops) there is a performance hit, because the shared-memory architecture is not exploited directly. This problem could be solved with a mixed design that uses threads within shared-memory nodes and MPI across the network.

Another major bottleneck at present is that all input is loaded into the master process, partitioned, and then distributed. This places an unnecessary load on the master process at startup and caps the size of the model that can be solved. To solve this issue the parser must be parallelized to some extent, so that different (pre-partitioned) parts of the model can be loaded by different processes at startup.
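One simple way to avoid funneling the whole model through a single process is to give each process a contiguous block of item ids (for example element ids) to read, and to refine that initial split afterwards with a parallel partitioner. The sketch below shows only the block split. `Range` and `blockRange` are hypothetical names, and this is one possible scheme rather than a description of how ESSI's parser would be changed.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>

// Half-open range [begin, end) of item ids assigned to one process.
struct Range {
    std::size_t begin;
    std::size_t end;
};

// Contiguous block distribution: every process gets numItems / numProcesses
// items, and the first (numItems % numProcesses) processes get one extra.
// Each process can compute its own range without any communication.
Range blockRange(std::size_t numItems, std::size_t numProcesses, std::size_t rank) {
    assert(numProcesses > 0);
    assert(rank < numProcesses);
    const std::size_t base = numItems / numProcesses;
    const std::size_t extra = numItems % numProcesses;
    const std::size_t begin = rank * base + std::min(rank, extra);
    const std::size_t end = begin + base + (rank < extra ? 1 : 0);
    return {begin, end};
}
```

For 10 items on 3 processes, the ranges are [0, 4), [4, 7), and [7, 10). Because each process derives its range from its own rank alone, no process ever needs to hold the full input.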

References

1. Boris Jeremić, Robert Roche-Rivera, Annie Kammerer, Nima Tafazzoli, Jose Abell M., Babak Kamranimoghaddam, Federico Pisano, ChangGyun Jeong and Benjamin Aldridge. The NRC ESSI Simulator Program, Current Status. In Proceedings of the Structural Mechanics in Reactor Technology (SMiRT) 2013 Conference, San Francisco, August 18-23, 2013.

2. Boris Jeremić, Guanzhou Jie, Matthias Preisig and Nima Tafazzoli. Time domain simulation of soil-foundation-structure interaction in non-uniform soils. Earthquake Engineering and Structural Dynamics, Volume 38, Issue 5, pp 699-718, 2009.

3. Boris Jeremić and Guanzhou Jie. Plastic Domain Decomposition Method for Parallel Elastic–Plastic Finite Element Computations in Geomechanics. Report UCD CompGeoMech 03–2007.

4. Lecture Notes on Computational Geomechanics: Inelastic Finite Elements for Pressure Sensitive Materials. UC Davis, CompGeoMech group.